Articulated (joint) robots offer high flexibility, accurate positioning, and stable operation, so they are widely used in automated logistics. Their introduction has greatly advanced logistics automation, improved logistics efficiency, and reduced labor costs. Typical applications in the logistics industry include depalletizing (unstacking), sorting, and loading and unloading.

There are two ways for an articulated robot to grasp a cargo unit accurately. The first relies on manual initialization: fixtures and other auxiliary tools bring the workpiece to the same designated position every time. The second equips the robot with a vision system, whose precise visual positioning lets the robot gripper grasp accurately. As technology develops and production environments become more complex, robots are required to complete tasks with higher precision in more complex environments. The supermarket industry handles many types of cargo units that differ considerably in size and appearance. Existing logistics equipment cannot position precisely across different stack shapes and cargo units, so existing automation equipment cannot handle these tasks.

Academia and industry are actively exploring applications of artificial intelligence in many fields, and vision technology is a research hotspot at home and abroad. In recent years, new deep learning network models have been proposed continually at top computer vision venues such as ECCV and CVPR. This project builds a regression-based deep learning network to perform image segmentation of supermarket cargo units and combines it with a binocular vision system to locate the spatial coordinates of each cargo unit precisely.

1. Advantages of vision systems in the logistics industry

A vision system solution is a multidisciplinary project that spans image processing, optical imaging, sensor technology, and computer software and hardware. A typical vision application system consists of an image capture module, a light source system, an image algorithm module, an intelligent judgment and decision-making module, and a mechanical control and execution module. The vision system in this project combines binocular vision with deep learning, which greatly improves its generality, accuracy, and stability.

1.1 Application of binocular vision

Binocular vision recognition makes it easier to obtain the distance of objects in three-dimensional space, improving the efficiency and accuracy of information acquisition. It does not depend on special external light sources and can still measure object distance accurately. In addition, analyzing and processing the acquired three-dimensional distance information with computer techniques yields richer semantic information. Traditional vision technology, by contrast, must rely on special external light sources when acquiring information and infers scene semantics from reflected light; it is therefore less general and cannot reliably interpret complex scenes. Binocular vision also solves recognition problems in three-dimensional space, greatly expanding the range of applications of vision technology.
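
The distance measurement described above rests on triangulation. For a rectified stereo pair with focal length f (in pixels) and baseline B (the distance between the two optical centres), a point imaged at column x_L in the left image and x_R in the right image has disparity d = x_L - x_R and depth Z = f·B/d. The following is a textbook sketch, not code from this project, and the focal length and baseline used with it are hypothetical. It also shows why stereo depth error grows roughly with the square of the distance, which is why camera accuracy is quoted at a stated working range.

```python
def stereo_depth(focal_px: float, baseline_mm: float, x_left: float, x_right: float) -> float:
    """Depth (mm) of a point seen by a rectified stereo pair, using Z = f * B / d."""
    disparity = x_left - x_right  # pixels; positive for points in front of the rig
    if disparity <= 0:
        raise ValueError("disparity must be positive for a valid depth")
    return focal_px * baseline_mm / disparity


def depth_resolution(focal_px: float, baseline_mm: float, depth_mm: float,
                     disparity_step_px: float = 1.0) -> float:
    """Approximate depth change (mm) caused by a disparity error of disparity_step_px.

    From Z = f*B/d it follows that |dZ/dd| = Z**2 / (f*B), so the error grows with Z**2.
    """
    return depth_mm ** 2 * disparity_step_px / (focal_px * baseline_mm)
```

With a hypothetical f = 1000 px and B = 100 mm, a disparity of 100 px gives Z = 1000 mm, and a one-pixel disparity error at that range corresponds to about 10 mm of depth, so sub-pixel disparity estimation is needed for millimetre-level accuracy.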

A binocular vision recognition system needs no large-scale equipment; it consists of relatively simple hardware, so it is highly flexible and easy to scale. The strengths of computer processing can also be exploited fully in the system, improving the reliability of binocular recognition. Binocular vision recognition can therefore address problems such as the difficulty traditional robots have in acquiring information, broaden the range of robot applications, and promote the development of intelligent robots.

1.2 Application of “deep learning” technology

Deep learning is a branch of machine learning and a general term for a class of pattern recognition methods. A typical deep learning architecture is a multi-layer perceptron with multiple hidden layers: by combining low-level features, it forms more abstract high-level representations (attribute categories or features) that support recognition, judgment, and analysis. Neural networks based on the convolution operation are an important direction of deep learning research and have achieved good results in defect detection, image recognition, optical character recognition (OCR), face recognition, fingerprint recognition, medical diagnosis, and other fields. Deep learning lets a vision system learn to recognize object features in a way loosely analogous to the human brain, which greatly improves the generality of the vision system. Traditional vision techniques, supported by algorithm toolkits, can still preprocess image signals and extract image features quickly and conveniently; adding deep learning makes it practical to handle scenarios with many object types and complex features.
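
To make "combining low-level features" concrete, the sketch below shows the basic building block of a convolutional layer: a small kernel slid over an image (valid cross-correlation, which is what most deep learning frameworks call convolution), followed by a ReLU non-linearity and 2×2 max pooling. The hand-picked vertical-edge kernel is illustrative only; in a trained network the kernel weights are learned, and this is not the segmentation network used in the project.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix) -> Matrix:
    """Slide kernel over image without padding (cross-correlation, as in CNN layers)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h, out_w = len(image) - kh + 1, len(image[0]) - kw + 1
    out = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            acc = 0.0
            for u in range(kh):
                for v in range(kw):
                    acc += image[i + u][j + v] * kernel[u][v]
            row.append(acc)
        out.append(row)
    return out


def relu(fmap: Matrix) -> Matrix:
    """Element-wise max(0, x): keeps positive responses, zeroes negative ones."""
    return [[max(0.0, x) for x in row] for row in fmap]


def max_pool2x2(fmap: Matrix) -> Matrix:
    """2x2 max pooling with stride 2 (an odd trailing row or column is dropped)."""
    return [
        [max(fmap[i][j], fmap[i][j + 1], fmap[i + 1][j], fmap[i + 1][j + 1])
         for j in range(0, len(fmap[0]) - 1, 2)]
        for i in range(0, len(fmap) - 1, 2)
    ]


# A 6x6 image that is dark on the left and bright on the right.
image = [[0, 0, 0, 1, 1, 1] for _ in range(6)]
edge_kernel = [[-1, 0, 1], [-1, 0, 1], [-1, 0, 1]]
edges = relu(conv2d_valid(image, edge_kernel))  # strong response along the edge
pooled = max_pool2x2(edges)                      # coarser, more abstract map
```

Stacking many such layers, each operating on the previous layer's feature maps, is what lets a network build carton-level features from simple edge and texture responses.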

2. Logistics plan

2.1 The overall logistics process

The supermarket turnover warehouse handles a wide variety of cargo units, has a large throughput, and requires fast response times.

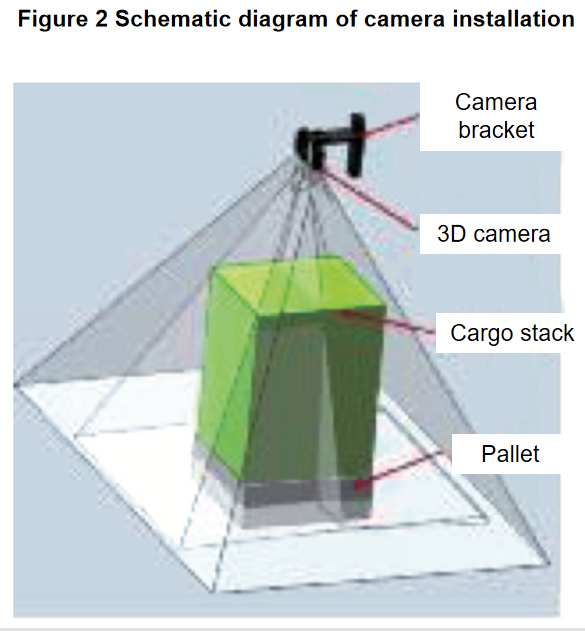

Figure 1 shows part of the project's logistics layout. This section of the logistics line depalletizes the cargo units buffered in the warehouse area and transports them to the designated outbound area. The operation proceeds as follows: the upper-level system issues an outbound instruction; the automated guided vehicle (AGV) receives the instruction, goes to the temporary storage area to pick up the goods, transports the cargo unit from A to B, and then leaves the workstation. Once the cargo unit is in place at B, the destacking system is triggered: the vision system identifies and locates the incoming goods, and the articulated robot arm grasps them accurately and transfers them to the conveyor line. Finally, the goods travel along the conveyor line to the designated outbound area. The whole process requires no manual intervention and is fully automated and information-driven.
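
The outbound sequence above can be summarised as a linear state machine. This is a minimal sketch: the step names are hypothetical labels for the stages in the text, not identifiers from the project's control software, and the repeated pick-and-place cycles for individual cartons are collapsed into a single step. In practice these stages run on the upper-level system, the AGV controller, and the robot PLC rather than in one program.

```python
from enum import Enum, auto
from typing import Dict, List


class OutboundStep(Enum):
    """Hypothetical labels for the outbound stages described in Section 2.1."""
    INSTRUCTION_ISSUED = auto()    # upper-level system issues an outbound instruction
    AGV_PICKUP = auto()            # AGV collects the cargo unit from temporary storage
    AGV_TRANSPORT_A_TO_B = auto()  # AGV carries the unit from A to B, then leaves
    VISION_LOCATE = auto()         # unit in place at B triggers destacking; vision locates goods
    ROBOT_PICK_PLACE = auto()      # articulated robot transfers goods to the conveyor line
    CONVEY_TO_OUTBOUND = auto()    # goods travel on the conveyor to the outbound area
    DONE = auto()


# Each stage has exactly one successor: no manual decision point in the flow.
NEXT_STEP: Dict[OutboundStep, OutboundStep] = {
    OutboundStep.INSTRUCTION_ISSUED: OutboundStep.AGV_PICKUP,
    OutboundStep.AGV_PICKUP: OutboundStep.AGV_TRANSPORT_A_TO_B,
    OutboundStep.AGV_TRANSPORT_A_TO_B: OutboundStep.VISION_LOCATE,
    OutboundStep.VISION_LOCATE: OutboundStep.ROBOT_PICK_PLACE,
    OutboundStep.ROBOT_PICK_PLACE: OutboundStep.CONVEY_TO_OUTBOUND,
    OutboundStep.CONVEY_TO_OUTBOUND: OutboundStep.DONE,
}


def run_outbound() -> List[OutboundStep]:
    """Follow the transition table from the first stage to DONE; return the visited stages."""
    step = OutboundStep.INSTRUCTION_ISSUED
    visited = [step]
    while step is not OutboundStep.DONE:
        step = NEXT_STEP[step]
        visited.append(step)
    return visited
```

An explicit transition table keeps the flow easy to audit and leaves room for fault states (for example, a failed grasp) to be added as extra entries.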

2.2 Visual destacking process

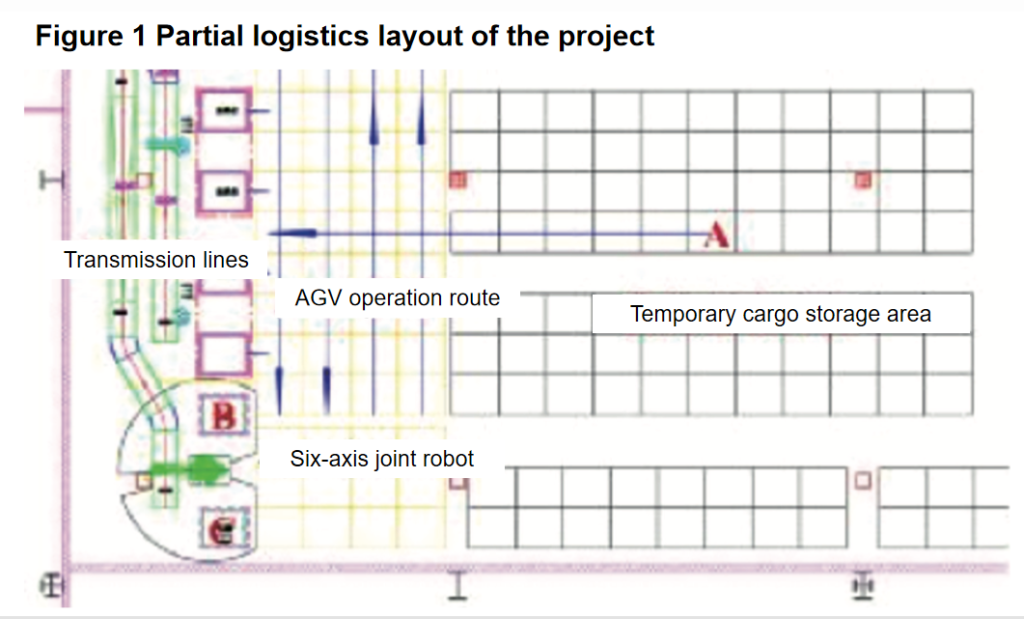

This project uses a binocular structured-light camera for image acquisition. The camera is mounted on a camera stand, and the picking equipment must not enter the camera's field of view. The palletized load measures 1000 × 1200 × 1600 mm. To keep the whole load within the camera's field of view, the distance between the camera and the pallet load must be at least 1140 mm. For system stability and accuracy, the camera must be mounted firmly so that vibration caused by the movement of the robot and the logistics equipment does not affect it. In addition, the camera must be placed outside the robot's range of motion so that the camera and the robot do not interfere with each other. Figure 2 shows the camera installation schematic.
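
The minimum camera distance follows from simple field-of-view geometry: at distance d, a camera with field of view θ covers 2·d·tan(θ/2) along each image axis. With an overhead camera, the plane that must fit is presumably the top of the full stack, since it is closest to the camera and the view widens with distance. The text does not give the camera's field of view, so the sketch below does not attempt to reproduce the 1140 mm figure; the function name and any FOV values passed to it are assumptions.

```python
import math


def min_standoff_mm(side_a_mm: float, side_b_mm: float,
                    hfov_deg: float, vfov_deg: float) -> float:
    """Smallest distance at which an a x b rectangle fits inside the camera's field of view.

    Assumes the optical axis is perpendicular to the rectangle and passes through its
    centre; at distance d the view spans 2 * d * tan(fov / 2) along each image axis.
    Both orientations of the rectangle are tried and the shorter distance is returned.
    """
    tan_h = math.tan(math.radians(hfov_deg) / 2)
    tan_v = math.tan(math.radians(vfov_deg) / 2)

    def needed(along_h: float, along_v: float) -> float:
        # Distance needed so that both half-widths fit inside the half-angles.
        return max((along_h / 2) / tan_h, (along_v / 2) / tan_v)

    return min(needed(side_a_mm, side_b_mm), needed(side_b_mm, side_a_mm))
```

Aligning the pallet's long side with the camera's wider field of view usually gives the shorter stand-off, which is why both orientations are evaluated; a practical layout would also add a margin for pallet placement tolerance.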

The structured-light binocular vision system used in this project outputs RGB color images and point cloud data. The camera's accuracy is 1 mm@1 m, that is, 1 mm at a distance of 1 m. In depalletizing scenes in shopping malls and supermarkets, the point clouds captured by the imaging device are noisy, and a single machine learning algorithm can hardly remove this noise on its own. Point cloud denoising therefore combines the three-dimensional data with features extracted from the two-dimensional image. The task requires automatic and accurate grasping of 1,000 kinds of cartons, a variety that traditional vision techniques cannot handle. The project therefore built a deep-learning-based neural network for image segmentation. The model segments every carton in the image and produces an independent mask for each one. Mapping each instance mask onto the point cloud removes the noise points that do not belong to the carton. From the denoised point cloud of each carton, a machine learning algorithm computes the carton's center coordinates, which are finally sent to the robot's PLC for precise positioning.
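
The mask-to-point-cloud step can be sketched as follows, assuming an organized point cloud that is pixel-aligned with the RGB image (cloud[r][c] holds the 3-D point seen at pixel (r, c), or None where depth is missing). Structured-light cameras commonly provide such output, but it is an assumption here. The text does not specify which algorithm computes the carton center, so a median/MAD depth filter followed by a plain centroid stands in for it; the function names and the 5 mm tolerance are hypothetical.

```python
import math
import statistics
from typing import List, Optional, Sequence, Tuple

Point = Tuple[float, float, float]  # (x, y, z) in the camera frame, mm
Cloud = Sequence[Sequence[Optional[Point]]]


def masked_points(cloud: Cloud, mask: Sequence[Sequence[bool]]) -> List[Point]:
    """Collect points whose pixel lies inside the carton mask and has a finite depth."""
    points = []
    for r, mask_row in enumerate(mask):
        for c, inside in enumerate(mask_row):
            p = cloud[r][c]
            if inside and p is not None and all(math.isfinite(v) for v in p):
                points.append(p)
    return points


def reject_depth_outliers(points: List[Point], k: float = 3.0,
                          tol_mm: float = 5.0) -> List[Point]:
    """Drop points whose z is far from the median z (median absolute deviation test).

    tol_mm is a hypothetical floor so that a flat carton top (MAD near 0) keeps its points.
    """
    zs = [p[2] for p in points]
    med = statistics.median(zs)
    mad = statistics.median([abs(z - med) for z in zs])
    limit = max(k * mad, tol_mm)
    return [p for p in points if abs(p[2] - med) <= limit]


def centroid(points: List[Point]) -> Point:
    """Arithmetic mean of the points along each axis."""
    n = len(points)
    return (sum(p[0] for p in points) / n,
            sum(p[1] for p in points) / n,
            sum(p[2] for p in points) / n)


def carton_center(cloud: Cloud, mask: Sequence[Sequence[bool]]) -> Optional[Point]:
    """Mask -> valid points -> depth outlier rejection -> centroid (None if nothing valid)."""
    points = masked_points(cloud, mask)
    if not points:
        return None
    return centroid(reject_depth_outliers(points))
```

The resulting center is in camera coordinates; before it is sent to the PLC it would still have to be transformed into the robot's base frame using a hand-eye calibration, which this sketch omits.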

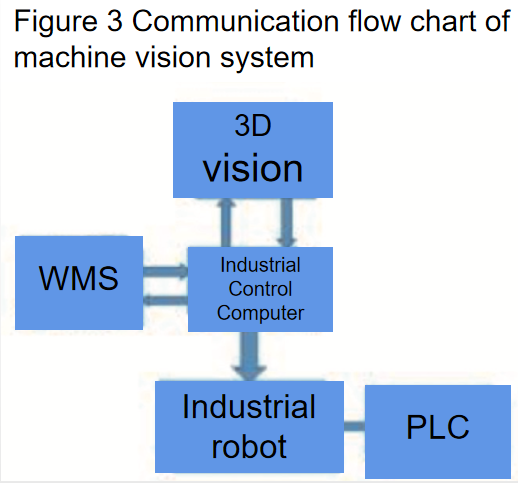

The binocular vision system works with the robot to automate depalletizing as follows. Once the cargo unit is in place, the upper-level system sends an instruction to the vision system, triggering image acquisition. The binocular vision system automatically recognizes the shape of the stack, calculates the spatial position of the target, and sends it to the robot. After the robot picks up the target carton at the received position, the binocular vision system performs image acquisition and computation again, and the robot moves above the conveyor line and places the carton on it. The robot then returns above the stack and picks up the next target carton according to the vision system's instructions. These steps repeat until the task is complete. Figure 3 shows the flow chart of the machine vision system.
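
The capture, locate, pick, and place cycle can be sketched as a loop that stops when the vision system finds no more cartons. This is a simplified, sequential sketch: the callback interfaces are hypothetical stand-ins for the camera and robot, and the rule of picking the carton nearest an overhead camera first (top layer first) is an assumed policy, since the text does not state how the next target is chosen.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional, Tuple

Point = Tuple[float, float, float]


@dataclass
class Carton:
    carton_id: int
    center: Point  # camera-frame center in mm; smaller z = closer to an overhead camera


def choose_target(cartons: List[Carton]) -> Optional[Carton]:
    """Assumed policy: take the carton nearest the overhead camera, i.e. the top layer."""
    return min(cartons, key=lambda c: c.center[2], default=None)


def depalletize(capture_and_locate: Callable[[], List[Carton]],
                pick_and_place: Callable[[Carton], None],
                max_cycles: int = 10_000) -> int:
    """Repeat capture -> select target -> pick and place until no carton is detected."""
    picked = 0
    for _ in range(max_cycles):
        target = choose_target(capture_and_locate())
        if target is None:
            return picked  # stack is empty: task complete
        pick_and_place(target)
        picked += 1
    raise RuntimeError("cycle limit reached; check vision and robot feedback")


# Simulated stack: carton 1 is lower (farther from the camera) than cartons 2 and 3.
stack = [Carton(1, (0, 0, 1200)), Carton(2, (300, 0, 1000)), Carton(3, (0, 300, 1000))]
order: List[int] = []


def fake_capture() -> List[Carton]:
    return list(stack)


def fake_pick(carton: Carton) -> None:
    order.append(carton.carton_id)
    stack.remove(carton)


count = depalletize(fake_capture, fake_pick)
```

Re-imaging after every pick, rather than planning all picks from one image, lets the system react if the stack shifts when a carton is removed; the cycle limit guards against an endless loop if a grasp repeatedly fails.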

3. Summary

The successful operation of the vision-guided articulated robot depalletizing system enables automatic depalletizing of many types of goods in the supermarket industry, helps move logistics from a labor-intensive toward a technology-intensive industry, and advances the development of intelligent logistics robots. It improves the efficiency and quality of robotic logistics work and greatly reduces labor costs. In addition, the successful deployment of the project provides a practical application scenario for advanced artificial intelligence techniques such as deep learning and broadens their range of application.